Pod Lifecycle and Restart Policies

Throughout its lifecycle, a Pod passes through a series of system-defined states (phases).

| State | Description |
|---|---|
| Pending | The API Server has accepted the Pod, but one or more of its containers have not yet been created. This includes time spent waiting to be scheduled and downloading images. |
| Running | The Pod is bound to a node, all of its containers have been created, and at least one container is running or is in the process of starting or restarting. |
| Succeeded | All containers in the Pod have exited successfully and will not be restarted. |
| Failed | All containers in the Pod have exited, and at least one container exited in failure (a non-zero exit code, or it was terminated by the system). |
| Unknown | The status of the Pod cannot be obtained for some reason, typically because of an error communicating with the node where the Pod runs. |

Pod Restart Policies

The RestartPolicy of a Pod applies to all containers in the Pod, and restart decisions are made and carried out only by the kubelet on the Node where the Pod runs. When a container exits abnormally or fails a health check, kubelet acts according to the RestartPolicy setting.

The RestartPolicy can be Always, OnFailure, or Never. The default is Always.

- Always: kubelet automatically restarts the container whenever it terminates, regardless of its exit code.

- OnFailure: kubelet automatically restarts the container when it terminates with a non-zero exit code.

- Never: kubelet never restarts the container regardless of its running state.
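The three policies boil down to a simple decision on the container's exit code. The sketch below is a simplified model, not kubelet code: it ignores restart backoff, and the names `RestartPolicy` and `should_restart` are hypothetical. A container killed because of a failed LivenessProbe exits with a non-zero code, so under OnFailure it would also be restarted.

```python
from enum import Enum


class RestartPolicy(Enum):
    """Hypothetical mirror of the three Pod restartPolicy values."""
    ALWAYS = "Always"
    ON_FAILURE = "OnFailure"
    NEVER = "Never"


def should_restart(policy: RestartPolicy, exit_code: int) -> bool:
    """Return True if a container that exited with exit_code would be restarted."""
    if policy is RestartPolicy.ALWAYS:
        return True  # restart regardless of the exit code
    if policy is RestartPolicy.ON_FAILURE:
        return exit_code != 0  # restart only after a non-zero exit
    return False  # NEVER: the container is left as it is


if __name__ == "__main__":
    for policy in RestartPolicy:
        for code in (0, 1):
            print(f"{policy.value:9} exit={code} -> restart={should_restart(policy, code)}")
```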

The interval before kubelet restarts a failed container grows exponentially: it is sync-frequency multiplied by 2^n (that is, 1, 2, 4, 8, ... times), with a maximum delay of 5 minutes. The delay is reset once a restarted container has run for 10 minutes without problems.
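The backoff rule can be written out as a small calculation. In this sketch `base_seconds` stands in for the base interval (sync-frequency in the text above; recent Kubernetes documentation describes the sequence as 10s, 20s, 40s, ...), and the function names are hypothetical.

```python
from typing import List

MAX_DELAY_SECONDS = 300.0    # 5-minute cap on the restart delay
RESET_AFTER_SECONDS = 600.0  # backoff resets after 10 minutes of trouble-free running


def restart_delays(base_seconds: float, restarts: int) -> List[float]:
    """Delay before each of the first `restarts` restarts: base * 2**n, capped."""
    return [float(min(base_seconds * 2 ** n, MAX_DELAY_SECONDS)) for n in range(restarts)]


def next_exponent(current: int, ran_for_seconds: float) -> int:
    """Exponent n for the next restart: back to 0 if the container ran long enough."""
    return 0 if ran_for_seconds >= RESET_AFTER_SECONDS else current + 1


if __name__ == "__main__":
    print(restart_delays(10, 7))  # [10.0, 20.0, 40.0, 80.0, 160.0, 300.0, 300.0]
```

From the sixth restart onward the delay stays at the 5-minute cap until the container manages to run for 10 minutes.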

The RestartPolicy is closely tied to how the Pod is managed. Pods can currently be managed by the ReplicationController, Job, and DaemonSet controllers, or directly by kubelet (static Pods). The restart policy requirements for each are as follows:

- ReplicationController and DaemonSet: must be set to Always so that the container keeps running continuously.

- Job: set to OnFailure or Never so that the container is not restarted once it has completed successfully.

- Static Pods managed directly by kubelet: kubelet automatically restarts the Pod when it fails, regardless of the RestartPolicy setting, and does not perform health checks on it.
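The controller rules listed above can be encoded as a lookup table and checked before a manifest is applied. This is a hypothetical validation helper written for illustration (the table and function names are not part of Kubernetes); it only covers the controllers named in the list.

```python
from typing import Dict, FrozenSet

# Restart-policy rules from the list above (hypothetical table name).
ALLOWED_RESTART_POLICIES: Dict[str, FrozenSet[str]] = {
    "ReplicationController": frozenset({"Always"}),
    "DaemonSet": frozenset({"Always"}),
    "Job": frozenset({"OnFailure", "Never"}),
}


def check_restart_policy(controller: str, restart_policy: str) -> None:
    """Raise ValueError if restart_policy is not allowed for the controller kind."""
    allowed = ALLOWED_RESTART_POLICIES.get(controller)
    if allowed is None:
        raise KeyError(f"no rule recorded for {controller!r}")
    if restart_policy not in allowed:
        raise ValueError(
            f"{controller} requires restartPolicy in {sorted(allowed)}, got {restart_policy!r}"
        )


if __name__ == "__main__":
    check_restart_policy("Job", "OnFailure")  # passes silently
    try:
        check_restart_policy("DaemonSet", "Never")
    except ValueError as err:
        print(err)
```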

Pod Health Checks

The health of a Pod's containers can be checked with two types of probes: LivenessProbe and ReadinessProbe.

- LivenessProbe: determines whether the container is alive (in the Running state). If the LivenessProbe detects that the container is unhealthy, kubelet kills the container and then handles it according to the container's restart policy. If a container does not define a LivenessProbe, kubelet treats the probe result as always “Success.”

- ReadinessProbe: determines whether the container has finished starting up (is in the Ready state) and can accept requests. If the ReadinessProbe fails, the Pod's Ready condition is set to False, and the Endpoint Controller removes the Pod's address from the Endpoints of every Service whose selector matches the Pod.
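The effect of a failed ReadinessProbe on Service traffic can be sketched as a filter: a Pod receives traffic only if it matches the Service selector and is ready. This is a simplified model with hypothetical names (`PodInfo`, `service_endpoints`); the real Endpoint Controller records not-ready Pods separately (as not-ready addresses) rather than simply dropping them.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class PodInfo:
    """Hypothetical, minimal view of a Pod: just what the example needs."""
    name: str
    ip: str
    labels: Dict[str, str]
    ready: bool  # aggregated result of the Pod's readiness probes


def service_endpoints(selector: Dict[str, str], pods: List[PodInfo]) -> List[str]:
    """Pod IPs a Service with this selector would route traffic to."""
    return [
        pod.ip
        for pod in pods
        if all(pod.labels.get(k) == v for k, v in selector.items())  # selector matches
        and pod.ready  # failing readiness probe => excluded from the endpoints
    ]


if __name__ == "__main__":
    pods = [
        PodInfo("web-1", "10.0.0.1", {"app": "web"}, ready=True),
        PodInfo("web-2", "10.0.0.2", {"app": "web"}, ready=False),  # still warming up
        PodInfo("db-1", "10.0.0.3", {"app": "db"}, ready=True),
    ]
    print(service_endpoints({"app": "web"}, pods))  # ['10.0.0.1']
```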

Probe Configuration Parameters

| Probe Parameter | Description |
|---|---|
| initialDelaySeconds | Number of seconds after the container starts before the first probe is run. Default is 0. |
| periodSeconds | How often (in seconds) the probe is executed. Default is 10 seconds. Minimum is 1. |
| timeoutSeconds | Number of seconds after which the probe times out. Default is 1 second. Minimum is 1. |
| successThreshold | Minimum number of consecutive successes for the probe to be considered successful after having failed. Default is 1. Must be 1 for liveness probes. Minimum is 1. |
| failureThreshold | Number of consecutive failures after which the probe is considered failed. Default is 3. Minimum is 1. |
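successThreshold and failureThreshold act as debouncers: a single probe result only changes the verdict once enough consecutive results agree. The class below is a hypothetical sketch of that counting logic, not kubelet code. A liveness probe starts out as healthy; a readiness probe starts out as not ready, which is modeled here with `initially_healthy=False`.

```python
class ProbeTracker:
    """Hypothetical helper: applies successThreshold/failureThreshold to raw probe results."""

    def __init__(self, success_threshold: int = 1, failure_threshold: int = 3,
                 initially_healthy: bool = True) -> None:
        if success_threshold < 1 or failure_threshold < 1:
            raise ValueError("thresholds must be at least 1")
        self.success_threshold = success_threshold
        self.failure_threshold = failure_threshold
        self.healthy = initially_healthy
        self._successes = 0  # consecutive successes so far
        self._failures = 0   # consecutive failures so far

    def record(self, ok: bool) -> bool:
        """Feed one probe result and return the current verdict."""
        if ok:
            self._successes += 1
            self._failures = 0
            if self._successes >= self.success_threshold:
                self.healthy = True
        else:
            self._failures += 1
            self._successes = 0
            if self._failures >= self.failure_threshold:
                self.healthy = False
        return self.healthy


if __name__ == "__main__":
    liveness = ProbeTracker()  # defaults: successThreshold=1, failureThreshold=3
    print([liveness.record(ok) for ok in [True, False, False, False]])
    # [True, True, True, False]: only the third consecutive failure flips the verdict
```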

HTTP Probe Configuration

For HTTP probes, kubelet sends an HTTP request to the specified path and port. The request goes to the Pod's IP address unless the optional host field overrides it. If the scheme field is set to HTTPS, kubelet sends an HTTPS request and skips certificate verification. In most cases you should not set the host field. One exception: if the container listens on 127.0.0.1 and the Pod's hostNetwork field is set to true, host under httpGet should be set to 127.0.0.1. If your Pod relies on virtual hosts, which is probably the more common case, do not use host; instead, set the Host header in httpHeaders.
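The field rules above determine which URL is requested and which headers are sent. The function below is a hypothetical sketch of that mapping (its name and signature are illustrative only); `http_headers` uses the same list-of-`{name, value}` shape as the httpHeaders field. IPv6 addresses, which would need brackets in the URL, are not handled.

```python
from typing import Dict, List, Optional, Tuple


def build_probe_request(
    pod_ip: str,
    port: int,
    path: str = "/",
    scheme: str = "HTTP",
    host: Optional[str] = None,
    http_headers: Optional[List[Dict[str, str]]] = None,
) -> Tuple[str, Dict[str, str]]:
    """Return (url, headers) for an httpGet probe following the rules described above."""
    target = host if host else pod_ip  # host overrides the Pod IP when set
    url = f"{scheme.lower()}://{target}:{port}{path}"
    # httpHeaders is a list of {"name": ..., "value": ...} entries.
    headers = {h["name"]: h["value"] for h in (http_headers or [])}
    return url, headers


if __name__ == "__main__":
    # Virtual-host case: keep the Pod IP as the target and send a Host header instead.
    print(build_probe_request("10.244.1.5", 80, "/healthz",
                              http_headers=[{"name": "Host", "value": "www.example.com"}]))
```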

Refer to the official documentation for more details.

Kubelet periodically executes the LivenessProbe to diagnose the health of a container. A probe can be implemented in one of the following three ways:

1. ExecAction

Executes a command inside the container. If the command exits with status code 0, the container is considered healthy.
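The exec mechanism reduces to "run a command, check its exit code". The sketch below runs the command on the local machine rather than inside a container (kubelet goes through the container runtime), and `exec_probe` is a hypothetical name; it treats a timeout or a command that cannot be started as a failure.

```python
import subprocess
import sys
from typing import List


def exec_probe(command: List[str], timeout_seconds: float = 1.0) -> bool:
    """Healthy if the command exits with status 0 within the timeout."""
    try:
        result = subprocess.run(command, capture_output=True, timeout=timeout_seconds)
    except subprocess.TimeoutExpired:
        return False  # a probe that times out counts as a failure
    except OSError:
        return False  # the command could not be started (e.g. not found)
    return result.returncode == 0


if __name__ == "__main__":
    # Stand-ins for a health command: one that succeeds and one that fails.
    print(exec_probe([sys.executable, "-c", "pass"]))                    # True
    print(exec_probe([sys.executable, "-c", "import sys; sys.exit(1)"]))  # False
```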

Example:

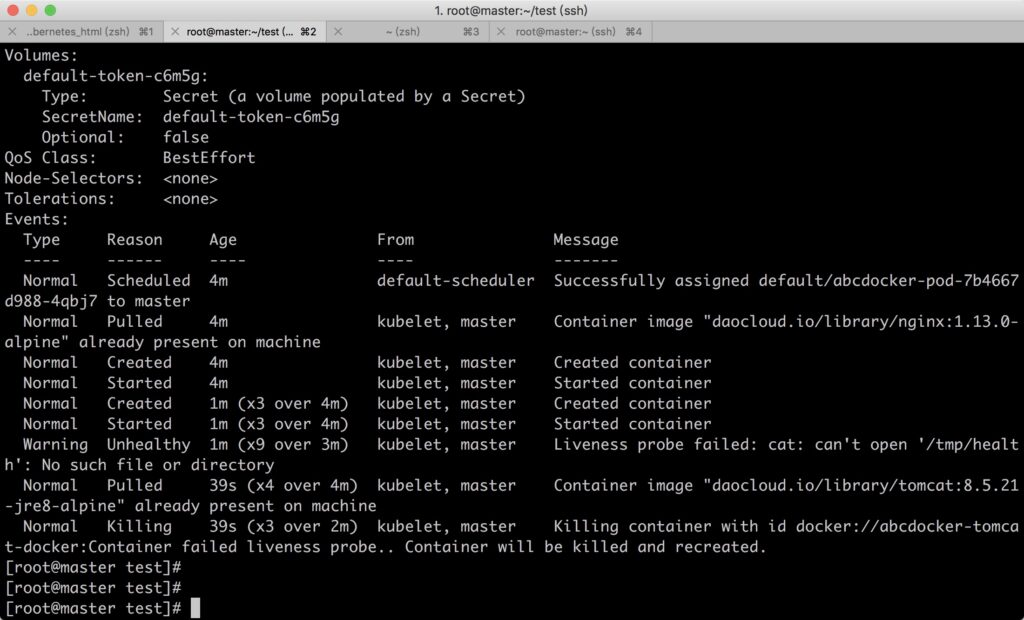

This example checks whether the container is running normally by executing “cat /tmp/health”. The container's startup command creates /tmp/health, sleeps 60 seconds, and then deletes the file. Because initialDelaySeconds is 15, the first probes find the file and succeed; after the file has been deleted, the command fails, and once the probe has failed failureThreshold times in a row (3 by default), kubelet kills the container and restarts it.

```
[root@master test]# cat docker_pod.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: docker-pod
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test
  template:
    metadata:
      labels:
        app: test
    spec:
      containers:
      - name: docker-nginx-docker
        image: daocloud.io/library/nginx:1.13.0-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        command: ["/bin/sh", "-c", "echo ok >/tmp/health; sleep 60; rm -rf /tmp/health; sleep 600"]
        livenessProbe:
          exec:
            command:
            - cat
            - /tmp/health
          initialDelaySeconds: 15
          timeoutSeconds: 1
```

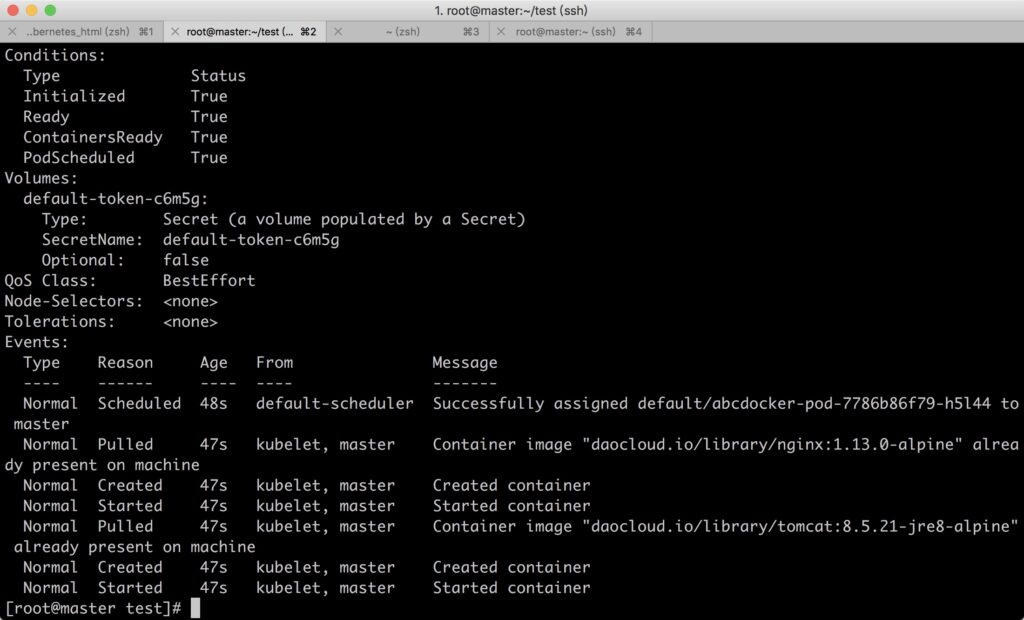
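The timing of the restart in this example can be estimated with a rough simulation. It assumes the defaults for the fields the manifest omits (periodSeconds 10, failureThreshold 3), takes the file-removal time from the manifest's `sleep 60`, and ignores probe jitter, probe duration, and container start-up time; `liveness_kill_time` is a hypothetical name.

```python
from typing import Optional


def liveness_kill_time(file_removed_at: float, initial_delay: float = 15, period: float = 10,
                       failure_threshold: int = 3, horizon: float = 3600) -> Optional[float]:
    """Approximate time (seconds after start) of the probe that triggers the kill."""
    failures = 0
    t = initial_delay  # first probe runs after initialDelaySeconds
    while t <= horizon:
        if t >= file_removed_at:  # "cat /tmp/health" fails once the file is gone
            failures += 1
            if failures >= failure_threshold:
                return t
        else:
            failures = 0  # a success resets the consecutive-failure count
        t += period
    return None  # not killed within the simulated horizon


if __name__ == "__main__":
    print(liveness_kill_time(60))  # 85: probes at 65, 75 and 85 fail
```

With the file removed after 10 seconds instead, the very first probe at 15 seconds would already fail and the kill would come at about 35 seconds.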

2. TCPSocketAction

Performs a TCP check by attempting to establish a TCP connection using the container’s IP address and port number. If the connection is successful, the container is considered healthy.
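A TCP probe only asks whether a connection can be opened; no data is exchanged. The sketch below shows that check with Python's standard `socket` module; `tcp_probe` is a hypothetical name, and the demo opens a throwaway local listener instead of a real container.

```python
import socket


def tcp_probe(host: str, port: int, timeout_seconds: float = 1.0) -> bool:
    """Healthy if a TCP connection to host:port can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout_seconds):
            return True
    except OSError:
        return False  # refused, unreachable, or timed out


if __name__ == "__main__":
    # Open a throwaway listening socket on a free local port (illustration only).
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))
    server.listen(1)
    port = server.getsockname()[1]
    print(tcp_probe("127.0.0.1", port))  # True: something is listening
    server.close()
    # Nothing listens on the port any more, so this connection should be refused.
    print(tcp_probe("127.0.0.1", port))
```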

```
[root@master test]# cat docker_pod.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: docker-pod
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test
  template:
    metadata:
      labels:
        app: test
    spec:
      containers:
      - name: docker-nginx-docker
        image: daocloud.io/library/nginx:1.13.0-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        livenessProbe:
          tcpSocket:
            port: 80
          initialDelaySeconds: 15
          periodSeconds: 10
          timeoutSeconds: 1
```

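In the test result shown below, the probe fails: the test was run with the tomcat image, which listens on port 8080 by default, so a TCP probe on port 80 cannot connect. With the nginx image in the manifest above, which listens on port 80, the same probe would succeed.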

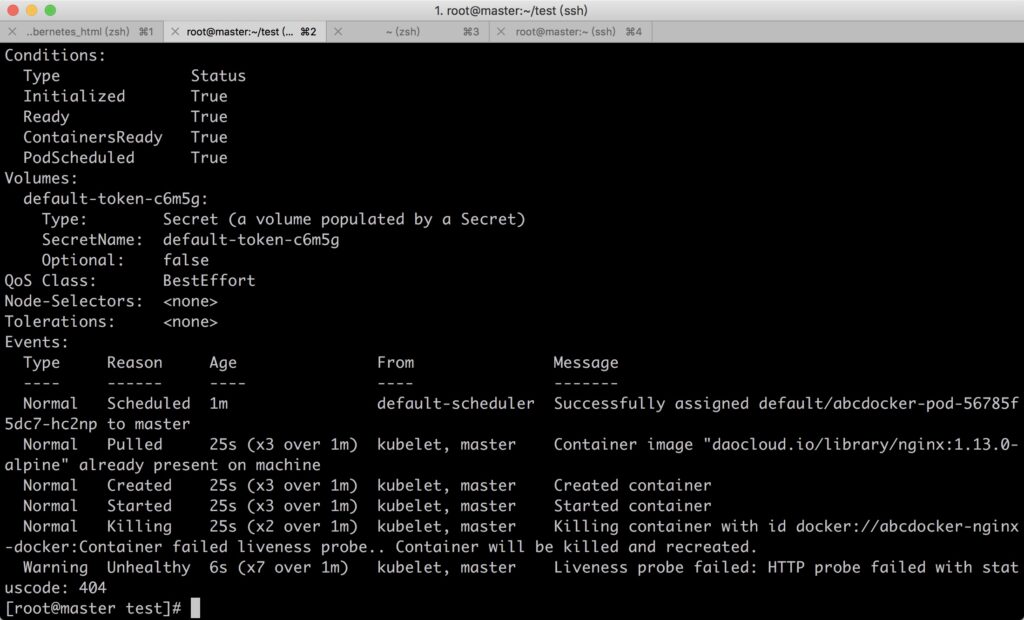

3. HTTPGetAction

Sends an HTTP GET request to the container's IP address on the specified port and path. If the returned status code is greater than or equal to 200 and less than 400, the container is considered healthy.
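The success rule (200 <= status < 400) can be sketched with Python's standard `http.client`. `http_get_probe` and `DemoHandler` are hypothetical names; the demo server stands in for a container whose only valid path is "/", mirroring the 404 case in the example below. Unlike kubelet, this sketch does not follow redirects: any 3xx response simply counts as a success.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


def http_get_probe(host: str, port: int, path: str, timeout_seconds: float = 1.0) -> bool:
    """Healthy if GET host:port/path answers with 200 <= status < 400."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout_seconds)
    try:
        conn.request("GET", path)
        status = conn.getresponse().status
    except (OSError, http.client.HTTPException):
        return False  # connection refused, timeout, malformed response, ...
    finally:
        conn.close()
    return 200 <= status < 400


class DemoHandler(BaseHTTPRequestHandler):
    """Hypothetical app: only "/" exists, every other path returns 404."""

    def do_GET(self) -> None:
        self.send_response(200 if self.path == "/" else 404)
        self.end_headers()

    def log_message(self, *args) -> None:  # keep the demo output quiet
        pass


if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), DemoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    port = server.server_address[1]
    print(http_get_probe("127.0.0.1", port, "/"))         # True
    print(http_get_probe("127.0.0.1", port, "/docker/"))  # False: 404
    server.shutdown()
```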

Example:

```
[root@master test]# cat docker_pod.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: docker-pod
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test
  template:
    metadata:
      labels:
        app: test
    spec:
      containers:
      - name: docker-nginx-docker
        image: daocloud.io/library/nginx:1.13.0-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        livenessProbe:
          httpGet:
            path: /docker/
            port: 80
          initialDelaySeconds: 15
          periodSeconds: 10
          timeoutSeconds: 1
```

Because the requested path “/docker/” does not exist, nginx returns a 404 status code, the probe fails, and kubelet restarts the container.

Pod Scheduling

In Kubernetes, a Pod is essentially a carrier for containers. Scheduling and automatic control of a group of Pods are usually handled by higher-level objects such as Deployment, DaemonSet, RC (ReplicationController), and Job.

Deployment/RC: Fully Automatic Scheduling

One of the main functions of a Deployment or RC is to automatically deploy multiple replicas of a containerized application and to continuously maintain the number of replicas the user specified in the cluster.
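"Continuously maintaining the replica count" is a reconciliation loop: compare the desired count with what is running and act on the difference. The function below is a hypothetical, single-step sketch of that comparison; it does not talk to a cluster, and the real controller uses its own rules to choose which Pods to delete.

```python
from typing import List, Tuple


def reconcile(desired: int, running: List[str]) -> Tuple[int, List[str]]:
    """Return (number of Pods to create, names of Pods to delete) to reach `desired`."""
    if len(running) < desired:
        return desired - len(running), []  # too few replicas: create the difference
    # Too many (or exactly enough) replicas: delete any surplus. This sketch simply
    # drops the tail of the list.
    return 0, running[desired:]


if __name__ == "__main__":
    # A node failure took out one of three nginx replicas.
    print(reconcile(3, ["nginx-a", "nginx-b"]))  # (1, [])
```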

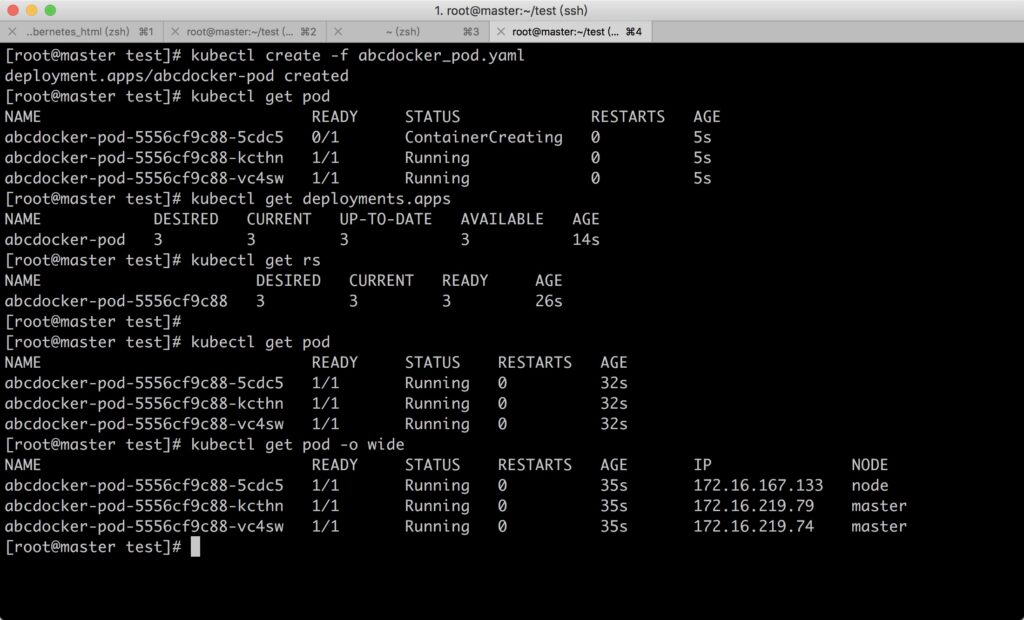

Below is an example of creating a Deployment configuration, which creates a ReplicaSet with three nginx application pods:

```
[root@master test]# cat docker_pod.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: docker-pod
spec:
  replicas: 3
  selector:
    matchLabels:
      app: test
  template:
    metadata:
      labels:
        app: test
    spec:
      containers:
      - name: docker-nginx-docker
        image: daocloud.io/library/nginx:1.13.0-alpine
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
```

The creation steps are the same as those demonstrated earlier, so only the result is discussed here.

From the scheduling strategy perspective, these three nginx Pods are fully automatically scheduled by the system. The final decision on which node each of them runs on is entirely determined by the master’s scheduler through a series of algorithms, and users cannot intervene in the scheduling process or results.

Besides using automatic scheduling algorithms to deploy a group of Pods, Kubernetes also provides rich scheduling strategies. Users only need to use more granular scheduling strategy settings such as NodeSelector, NodeAffinity, PodAffinity, Pod Disruption Budgets, etc., in the Pod definition to achieve precise Pod scheduling.

NodeSelector: Directed Scheduling

The Scheduler service on the Kubernetes Master (the kube-scheduler process) is responsible for scheduling Pods.

By default, a Pod is eligible to run on any node. However, you can use a Kubernetes feature called nodeSelector to restrict which nodes a Pod is eligible to run on, based on the labels of those nodes.

In Kubernetes, nodes carry labels in the form of key-value pairs. For example, a node might be labeled “environment=production” or “disk=ssd”. A node can have multiple labels.

A Pod can specify a nodeSelector field in its spec. nodeSelector is a map of key-value pairs: for the Pod to be eligible to run on a node, the node must have every one of these key-value pairs as labels.

Below is an example of how to use NodeSelector to schedule Pods to nodes with specific labels:

Create a Pod with NodeSelector:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx
  nodeSelector:
    disk: ssd
```

In this example, the Pod nginx will only be scheduled to nodes with the label disk=ssd.
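The matching rule is an "every key-value pair must be present" check against the node's labels. The sketch below uses hypothetical node names and labels (in a real cluster, labels are usually added with `kubectl label nodes <node-name> disk=ssd`); the function names are illustrative only, and the real scheduler applies many other filters besides nodeSelector.

```python
from typing import Dict, List


def matches_node_selector(node_labels: Dict[str, str], node_selector: Dict[str, str]) -> bool:
    """True if the node carries every key=value pair of the nodeSelector."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


def eligible_nodes(nodes: Dict[str, Dict[str, str]], node_selector: Dict[str, str]) -> List[str]:
    """Names of the nodes a Pod with this nodeSelector may be scheduled onto."""
    return [name for name, labels in nodes.items() if matches_node_selector(labels, node_selector)]


if __name__ == "__main__":
    nodes = {  # hypothetical cluster
        "node1": {"disk": "ssd", "environment": "production"},
        "node2": {"disk": "hdd"},
        "node3": {},
    }
    print(eligible_nodes(nodes, {"disk": "ssd"}))  # ['node1']
```

An empty nodeSelector matches every node, which corresponds to the default behavior of a Pod being eligible to run anywhere.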

With nodeSelector you can steer Kubernetes' scheduling decisions and enforce a Pod placement strategy.

For a more detailed introduction to Kubernetes scheduling policies and nodeSelector usage, refer to the official Kubernetes documentation.